When

there is a performance problem on a SQL Server, we

normally rush to treat the symptoms that are impacting the performance.

While some measures can definitely help alleviate current issues

temporarily, the performance issues will tend to come back again with

different symptoms and in a different form. When the underlying infrastructure is suboptimal or if it has

any misconfigurations, the issues will continue to persist forever.

I/O bottleneck and SQL Server symptoms: SQL Server is an I/O intensive application and as a result, the I/O subsystem requires most appropriate and optimal configurations to be able to handle the demanding workload. If the configurations are suboptimal, then we will end up observing some combination of the following symptoms on a SQL Server indicating I/O performance issues:

- Disk latency and I/O throughput (Average Disk Queue Length, Disk Sec/Transfer, IOPS)

- I/O wait types (IO_COMPLETION, ASYNC_IO_COMPLETION, PAGEIOLATCH_**, WRITELOG)

- tempdb contention (LATCH_**), either sporadic or long duration

- High CPU usages and increased Processor Queue Length

- Slow query execution and decreasing query response time

- Concurrency problem and application time-out

- Observing lock contention (LCK_M_**) and SQL blocking

- Memory pressure (RESOURCE_SEMAPHORE), Swapping and Paging activities

- Reduction of Network Throughput (ASYNC_NETWORK_IO, MB/Sec, Packet/Sec)

A famous I/O alert from Storage system to SQL Server: DBA who are managing a SQL Server on a physical or virtual environment are familiar with the following I/O alert. This alert arises due to limitation of SAN’s queue depth or misconfiguration of the VM and VMDK file.

SQL Server has encountered 10 occurrence(s) of I/O requests taking longer than 15 seconds to complete on file [E:\ProdData\prod_data_04.ndf] in database id 6. The OS file handle is 0x000000000000134C. The offset of the latest long I/O is: 0x00003afe460000. The duration of the long I/O is: 25274 ms.

SQL Server has encountered 22 occurrence(s) of I/O requests taking longer than 15 seconds to complete on file [T:\tempdb\tempdb_03.ndf] in database id 2. The OS file handle is 0x00000000000010E8. The offset of the latest long I/O is: 0x0000001d230000.

An I/O optimized VMWare VM: When building a virtual machine (VM) for SQL Server, we should give attention to the VMFS Data Store, Storage Controller, VMDK file and their configurations. The following are a few areas where everyone should place importance when designing I/O infrastructure for a SQL Server.

SCSI Controller and Paravirtualized SCSI Driver (PVSCSI): PVSCSI is the high-performance native driver for VMWare VM that is also the widely-recommended driver to use with all SQL Server deployments in order to improve I/O throughput, to lower I/O latency and reduce the number of CPU cycles consumed. Using a PVSCSI driver will improve I/O throughput by up to 12% and reduce CPU usage by up to 30%.

VMFS datastore and I/O isolation: SQL Server has two types of I/O patterns: Random and Sequential, with different block sizes varying from 512 bytes to 8MB. Due to this fact, the VMFS Data Store needs to be isolated based on the random and sequential I/O patterns in the storage system. Here is an example of a possible database isolation:

- sql_windows_datastore: for Windows and SQL Server binary files.

- sqldata_datastore_01: for data file – optimized for random I/O

- sqldata_datastore_02: for Index, Columstore – optimized for sequential I/O

- sqllog_datastore_01: for log file – optimized for sequential I/O

- sqltempdb_datastore: for tempdb data and tempdb log file

SCSI Controller: Currently, there are four commonly used Storage Controllers in ESXi for VM and each has different use cases:

- LSI Logic Parallel - Legacy driver for backward compatibility with older Operating Systems.

- LSI Logic SAS – This is the default option for a VM which will work in most Operating systems.

- VMWare Paravirtual – Paravirtualized SCSI controller developed to enhance performance in all recent Operating Systems that support the latest VMware Tools.

- NVMe Controller – It is the preferred option if the underlying storage system is based on SSD and NVMe. However, this controller can be used regardless of the underlying storage system for a VM created on ESXi 6.5 and later.

Each VM can have a maximum of two IDE controllers, four SATA controllers, four SCSI controllers and four NVMe controllers and each storage controller can hold up to 15 VMDF files. While creating a VM for SQL Server, it is important to align each SCSI controller to the intended Data Store that was previously created in the VMFS datastore in ESXi. A good example for an I/O intensive workload would be the following:

- SCSI Controller 0, scsi(0:0): sql_windows_datastore

- SCSI Controller 1, scsi(1:0): sqldata_datastore_01

- SCSI Controller 1, scsi(1:1): sqldata_datastore_02

- SCSI Controller 2, scsi(2:0): sqllog_datastore_01

- SCSI Controller 2, scsi(2:1): sqltempdb_datastore, and so on.

It is not recommended for a SQL Server I/O operation to use a single SCSI Controller to hold all VMDK files. A bad example is the following:

- SCSI Controller 0, scsi(0:0): sql_windows_datastore

- SCSI Controller 0, scsi(0:1): sqldata_datastore_01

- SCSI Controller 0, scsi(0:2): sqldata_datastore_02

- SCSI Controller 0, scsi(0:3): sqllog_datastore_01

- SCSI Controller 0, scsi(0:4): sqltempdb_datastore

The storage controller for the OS, Page File and Backup can be LSI Logic SAS or VMWare Paravirtual.

Storage Controller Queue Depth: LSI Logic SAS SCSI Controller is not an optimal choice for a SQL Server implementation as it has only 32 Queue Depth which is insufficient for SQL Server I/O. On the other hand, the Paravirtualized SCSI Controller (PVSCSI) has 64 Queue Depth and can be configured up to 254. It is highly recommended to use multiple PVSCSI SCSI controllers for SQL Server and spread out the data, index, log and tempdb files across the controllers.

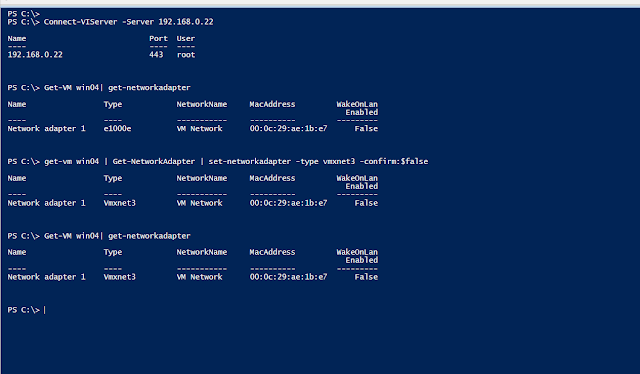

As PVSCSI is not native to Windows, VMTools must be installed. An additional step will also be to create a Windows Registry key to reconfigure the Queue Depth for PVSCSI. Also note that the VMXNET3 network adaptor must present in the VM to take advantage of the I/O eco-system.

Following are two approaches to configuring Queue Depth for PVSCSI on a VM:

Using CMD prompt: Run the following command on the VM to create the required Registry key and the associated values.

REG ADD HKLM\SYSTEM\CurrentControlSet\services\pvscsi\Parameters\Device /v DriverParameter /t REG_SZ /d "RequestRingPages=32,MaxQueueDepth=254"Using PowerShell:

Get-Item -Path “HKLM:\SYSTEM\CurrentControlSet\services\pvscsi\Parameters\Device” New-Item -Path “HKLM:\SYSTEM\CurrentControlSet\services\pvscsi\Parameters\Device” Set-ItemProperty -Path “HKLM:\SYSTEM\CurrentControlSet\services\pvscsi\Parameters\Device” -name DriverParameter -value “RequestRingPages=32,MaxQueueDepth=254” | Out-Null Get-Item -Path “HKLM:\SYSTEM\CurrentControlSet\services\pvscsi\Parameters\Device”

NTFS Allocation Unit Size: The default NTFS Allocation Unit size is 4K for all volumes of up to 16TB. The SQL Server volumes or mount points which will hold SQL Server data files, log files, tempdb files must be formatted with 64K. For Windows OS, the application binary drive 4K is appropriate and does not require any changes. While formatting a drive, make sure that the “Quick Format” option has not been selected.

To check the NTFS Allocation unit, run the following command:

- C:\> fsutil fsinfo ntfsinfo e:

- C:\> fsutil fsinfo ntfsinfo f:, and so on

Using PowerShell:

$server ='YourSQL_Server' Get-CimInstance -ComputerName $server -ClassName Win32_Volume ` | where-object {$_.DriveLetter -gt ''} ` | Sort-Object DriveLetter ` | select DriveLetter, FileSystem, BootVolume, Blocksize

Partition Alignment: Starting with Windows 2008, all partition offsets are aligned to a 1MB (1024KB or 1048576 bytes) boundary. If the VMDK file is created using the vSphere vCenter, then the portion alignment issue is unlikely to exist. However, partition alignment must be verified and if there is a misalignment, then the partition must be re-created and reformatted to align with a 1MB starting offset or a vendor recommended offset.

To check partition alignment, run the any of the following command:

- C:\> wmic partition get Name, BlockSize, StartingOffset, Index

$server ='YourSQL_Server' Get-CimInstance -ComputerName $server -ClassName Win32_DiskPartition ` | sort-object Name ` | select Name, BlockSize, StartingOffset, Index

Thick Provisioned Eagerly Zeroed VMDK: For a heavy write-intensive SQL Server, it is recommended to use the “Thick Provisioned Eagerly Zeroed” VMDK disk. This will essentially eliminate the penalty of zeroing out the blocks at its first write. If the SQL Server workload is mostly read-oriented, then using “Thin Provisioned” will be sufficient and there will be no noticeable degradation in I/O performance.

Power Configuration setting on VM: The Power setting of a SQL Server VM must be in “High Performance” mode. Conserving the power of a VM leads to CPU throttling, which leads to a severe negative impact on application performance and I/O throughput.

Monitoring I/O Performance for a VM:The following are the four common I/O metrics used to measure performance:

- GAVG (Guest Average Latency) - Total Latency, this is the amount of time it takes for an I/O to be completed, after it leaves the VM and until it is acknowledged back.

- KAVG (Kernel Average Latency) - Time an I/O request spent waiting inside the vSphere storage stack.

- DAVG (Device Average Latency) - Latency coming from the physical hardware, HBA and Storage device.

- QAVG (Queue Average Latency) - Time spent waiting in a queue inside the vSphere Storage Stack.

VMWare recommends that the DAVG, KAVG and GAVG metrics should not exceed more than 10 milliseconds for a sustained period of time and the QAVG should not exceed 1 millisecond. Take a look at this article https://virtunetsystems.com/how-does-queue-depth-affect-latency-and-iops-in-vmware/.

|

| Taken from How does Queue Depth affect latency and IOPS in VMware? |

|

| Using ESXTOP to examine I/O performance |

Recommendations in a Nutshell: As per VMware and Microsoft recommended best practices, a SQL Server on a VM should be configured as follows for optimal I/O performance:

- VMFS Data Store and VMDK file based on Random and Sequential I/O patterns

- Paravirtualized SCSI Controller (PVSCSI) and Paravirtualized Network Adaptor (VMXNET3) for high throughput

- Using multiple SCSI Controllers for the VM to allow more I/O to pass to the storage system

- Reconfiguration of PVSCSI’s queue depth, up to 254

- Use 64K NTFS Allocation Unit for data and log files on all volume and mount points without the “Quick Format” option

- For high write-intensive SQL Servers, use “Think provisioned eager zero” type, otherwise use “Thin provisioned” VMDK

- Ensure “Patrion Alignment” is accurate

- Use “High Performance” power setting

Reference:

- Configuring disks to use VMware Paravirtual SCSI (PVSCSI) controllers (1010398): https://kb.vmware.com/s/article/1010398

- Architecting Microsoft SQL Server on VMware vSphere: https://core.vmware.com/resource/architecting-microsoft-sql-server-vmware-vsphere#esxi-host-configuration

- VMWare Press: Virtualizing SQL Server with VMWare (Doing it right):

- Troubleshoot slow SQL Server performance caused by I/O issues: https://learn.microsoft.com/en-us/troubleshoot/sql/database-engine/performance/troubleshoot-sql-io-performance