Reviewing the SQL Server Errorlog is a common and important task that SQL Server Database Professionals perform on a regular basis to identify and troubleshoot database server related issues. The simplistic process described in this post can prove to be a very effective method for a large number of SQL Server instances.

The process parses the Errorlog for known keywords or common phrases, then categories each event before dumping them to a SQL Server table. For visualization purposes, the open source tool Grafana (http://www.grafana,com) is used, and for wild card searches SQL Server FullText is also used.

The following PowerShell Script parses the Errorlog from multiple servers and inserts the categorized data into a SQL Server Table.

Scrips and dashboard download Link:

File: PowerShell - ProcessErrorlogMain.zip

Link: http://bit.ly/1cZNScb

Direct link: https://www.dropbox.com/scl/fo/t4uok05mplkbzt5n5d8hs/h?rlkey=r4wfnyuddbj1l6sakaw055fiv&dl=0

Common Variables used in the PowerShell scripts:

- $nInitialDays = Initial number of days to scan

- $sqlInstance = SQL Server instances

- $LastCheckpoint = Staring date and time

- $NextCheckpointTime = End date and time

- “Servers.txt” = list of SQL server instances, we need to create this file

- “Checkpoint.txt” = used to hold last scan date and time, will be created automatically

SQL Server Table for parsed data preservation:

USE [yourdbname] GO -- table for SQL Server event log CREATE TABLE [dbo].[EventTbl] ( [eventID] [bigint] IDENTITY (1, 1) NOT NULL ,[eventCreated] [datetime] NULL ,[eventServer] [varchar](50) NULL ,[eventSource] [varchar](50) NULL ,[eventType] [varchar](50) NULL ,[eventText] [varchar](8000) NULL ,CONSTRAINT [PK_EventTbl] PRIMARY KEY CLUSTERED ( [eventID] ASC ) WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON) ON [PRIMARY] ) ON [PRIMARY] GO -- Create a fulltext catalog CREATE FULLTEXT CATALOG [ErrorlogCatalog] WITH ACCENT_SENSITIVITY = OFF AUTHORIZATION [db_owner] -- Create fulltext index CREATE FULLTEXT INDEX ON [dbo].[EventTbl] KEY INDEX [PK_EventTbl] ON ([ErrorlogCatalog], FILEGROUP [PRIMARY]) WITH (CHANGE_TRACKING AUTO) ALTER FULLTEXT INDEX ON [dbo].[EventTbl] ADD ([eventText] LANGUAGE [English]) ALTER FULLTEXT INDEX ON [dbo].[EventTbl] ENABLE

ProcessErrorlogReview.ps1: Quick review of Errorlog from multiple servers

This PowerShell Script examines all Errorlogs within a certain time interval and displays the output. One or more server names can be passed, either by supplying the server names in a “servers.text“ file or by assigning server names directly to the variable $sqlInstances in the script.

Clear-Host Import-Module sqlserver $nInitialDays = 15 $match = '(Login|shutdown)' [DateTime]$InitialStart = $(Get-Date).AddDays(-$nInitialDays) # Load the list of server from file # $PSScriptRoot = Split-Path -Parent -Path $MyInvocation.MyCommand.Definition # $path = "$PSScriptRoot" # $sqlInstanaces = Get-Content ($path + "\Servers.txt") # SQL Server instances to collect errorlog event # Supply server name [String[]]$sqlInstanaces = "SHBMAIN","SHBMAIN\SQL2019","SHBMAIN\SQL2017" # Next check point date and time $CheckTime = Get-Date $result = @() foreach ($sqlInstanace in $sqlInstanaces) { $obj = "" | select eventCreated, eventServer,eventSource,eventType,eventText $obj = Get-SqlErrorLog -ServerInstance $sqlInstanace -After $InitialStart -Before $CheckTime -ErrorAction SilentlyContinue | ` select @{ n = "eventCreated"; e = { $_.Date } }, ` @{ n = "eventYear"; expression = { $_.Date.ToString("yyyy") } }, ` @{ n = "eventMonth"; expression = { $_.Date.ToString("MMMM") } }, ` @{ n = "eventDate"; expression = { $_.Date.ToString('MM/dd/yyyy') } }, ` @{ n = "eventTime"; expression = { $_.Date.ToString('hh:mm:ss tt') } }, ` @{ n = "eventServer"; e = { $_.ServerInstance } }, ` @{ n = "eventSource"; e = { $_.Source } }, ` @{ n = "eventType"; expression = { if ($_.'Text' -match 'DBCC CHECKDB' ) {'CHECKDB'} elseif ($_.'Text' -match 'cachestore' ) {'CacheFlush'} elseif ($_.'Text' -match 'Job Failed' ) {'JobFailed'} elseif ($_.'Text' -match '(specified in network packet|packet used to open)' ) {'Connection'} elseif ($_.'Text' -match 'Configuration' ) {'Configuration'} elseif ($_.'Text' -match 'memory' ) {'Memory'} elseif ($_.'Text' -match 'Killed' ) {'Killed'} elseif ($_.'Text' -match 'requests taking longer' ) {'I/O'} elseif ($_.'Text' -match 'FlushCache' ) {'I/O'} elseif ($_.'Text' -match 'Login failed' ) {'LoginFailed'} elseif ($_.'Text' -match 'Login succeeded' ) {'LoginSucceeded'} elseif ($_.'Text' -match 'deadlocked' ) {'Deadlock'} elseif ($_.'Text' -match 'SQL Trace ID' ) {'TraceStart'} elseif ($_.'Text' -match 'SQL Trace stopped' ) {'TraceStop'} elseif ($_.'Text' -match 'Database backed up' ) {'BackupFull'} elseif ($_.'Text' -match 'Database differential changes were backed up' ) {'BackupDifferential'} elseif ($_.'Text' -match 'Log was backed' ) {'BackupLog'} elseif ($_.'Text' -match 'system shutdown' ) {'Shutdown'} elseif ($_.'Text' -match 'RESOLVING_NORMAL' ) {'AlwaysOn'} elseif ($_.'Text' -match 'RECOVERY' ) {'DatabaseRecoveryModel'} elseif ($_.'Text' -match 'RESTORING' ) {'DatabaseRestoring'} elseif ($_.'Text' -match 'Fatal' ) {'Error'} else {'Other'}} }, ` @{ n = "eventText"; e = { $_.Text.Replace("'", "''") } }

# filter out whatever is needed # $obj = $obj | Where-Object { $_.eventType -notmatch "Other|LogBackup" } # $obj = $obj | Where-Object { $_.eventSource -match "Logon" -and $_.eventType -notmatch "Other"}

$obj = $obj | Where-Object { $_.eventType -match $match } if ($obj.Count -ge 1 ) { Write-Host "Number of records for $sqlInstanace : $($obj.count)" $result += $obj } } $result | ft

Sample output of parsed errorlog:

ProcessErrorlogMain.ps1: For automation - parsing, categorizing, preserving data and visualization

This script is used to collect events from one or more SQL Servers using a regular interval through a scheduled task and inserts the parsed data into a SQL Server Table.

How does it work?

When it runs for the first time, either through a scheduler task or a SQL Server Agent Job, the script parses data for the last 15 days, e.g. from x date to current date time. It remembers the last scan date and time, and creates a checkpoint.txt file. When the script is run again, it retrieves the last scan date and time from the checkpoint.txt file and uses it as the starting time.

The parsed data will be inserted into a SQL Server Table (EventTbl) on a SQL Server database.

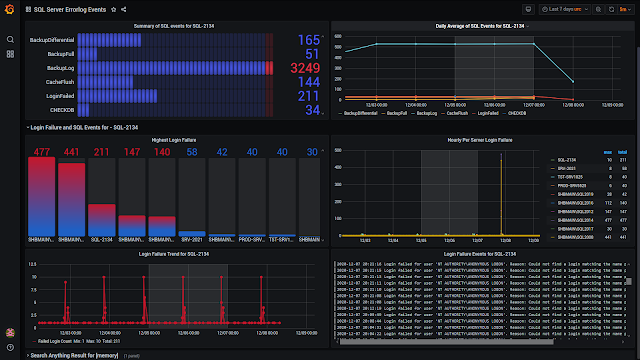

Visualization of parsed Errorlog data:

Since Grafana supports SQL Server connectivity, it will be used to visualize the data. The data source name in the dashboard is “SQLLog”.

Clear-Host Import-Module sqlserver # Database server to export results $database = 'TestDB' $server = 'SHBMAIN\SQL2019' $table = 'dbo.EventTbl' $nInitialDays = 15 [DateTime]$InitialStart = $(Get-Date).AddDays(-$nInitialDays) # Load the checkpoint file $PSScriptRoot = Split-Path -Parent -Path $MyInvocation.MyCommand.Definition $path = "$PSScriptRoot" $CheckPointFile = $path + "\Checkpoint.txt" # SQL Server instances to collect errorlog event # Supply server name in the script # [String[]]$sqlInstanaces = "SA0481V", "SA0997V", "SA0270V","SA0435" # Supply server name from a text file # List off all servers from the 'servers.txt' if (!( Test-Path ($path + "\Servers.txt") )) { Write-Host "Servers.txt does not contain any SQL Server Instances. Add SQL Servers...." -ForegroundColor Yellow Write-Host "" $null | Add-Content ($path + "\Servers.txt") return } else { $sqlInstanaces = Get-Content ($path + "\Servers.txt") -Force } # Retrieve last scan date/time from Checkpoint file if (! (Test-Path $CheckPointFile)) # Checkpoint file not found { Write-Host 'Checkpoint file does NOT exist' # first and last log scan date and time $LastCheckpoint = $InitialStart $NextCheckpointTime = Get-Date $NextCheckpointTime | Set-Content -Force $CheckPointFile } else # the Checkpoint file does exist { Write-Host 'Checkpoint file does exist' [DateTime]$LastCheckpoint = Get-Content -Force $CheckPointFile $NextCheckpointTime = Get-Date $NextCheckpointTime | Set-Content -Force $CheckPointFile } Write-Host "Staring from : $LastCheckpoint" Write-Host "Next Start: $NextCheckpointTime" Write-Host " " #$match = 'Login failed|requests taking longer|is full' #$notmatch = 'without errors|found 0 errors' # $result = @() foreach ($sqlInstanace in $sqlInstanaces) { $obj = "" | select eventCreated, eventServer,eventSource,eventType,eventText $obj = Get-SqlErrorLog -ServerInstance $sqlInstanace -After $LastCheckpoint -Before $NextCheckpointTime -ErrorAction SilentlyContinue | ` select @{ n = "eventCreated"; e = { $_.Date } }, ` @{ n = "eventYear"; expression = { $_.Date.ToString("yyyy") } }, ` @{ n = "eventMonth"; expression = { $_.Date.ToString("MMMM") } }, ` @{ n = "eventDate"; expression = { $_.Date.ToString('MM/dd/yyyy') } }, ` @{ n = "eventTime"; expression = { $_.Date.ToString('hh:mm:ss tt') } }, ` @{ n = "eventServer"; e = { $_.ServerInstance } }, ` @{ n = "eventSource"; e = { $_.Source } }, ` @{ n = "eventType"; expression = { if ($_.'Text' -match 'DBCC CHECKDB' ) {'CHECKDB'} elseif ($_.'Text' -match 'cachestore' ) {'CacheFlush'} elseif ($_.'Text' -match 'Job Failed' ) {'JobFailed'} elseif ($_.'Text' -match '(specified in network packet|packet used to open)' ) {'Connection'} elseif ($_.'Text' -match 'Configuration' ) {'Configuration'} elseif ($_.'Text' -match 'memory' ) {'Memory'} elseif ($_.'Text' -match 'Killed' ) {'Killed'} elseif ($_.'Text' -match 'requests taking longer' ) {'I/O'} elseif ($_.'Text' -match 'FlushCache' ) {'I/O'} elseif ($_.'Text' -match 'Login failed' ) {'LoginFailed'} elseif ($_.'Text' -match 'Login succeeded' ) {'LoginSucceeded'} elseif ($_.'Text' -match 'deadlocked' ) {'Deadlock'} elseif ($_.'Text' -match 'SQL Trace ID' ) {'TraceStart'} elseif ($_.'Text' -match 'SQL Trace stopped' ) {'TraceStop'} elseif ($_.'Text' -match 'Database backed up' ) {'BackupFull'} elseif ($_.'Text' -match 'Database differential changes were backed up' ) {'BackupDifferential'} elseif ($_.'Text' -match 'Log was backed' ) {'BackupLog'} elseif ($_.'Text' -match 'system shutdown' ) {'Shutdown'} elseif ($_.'Text' -match 'RESOLVING_NORMAL' ) {'AlwaysOn'} elseif ($_.'Text' -match 'RECOVERY' ) {'DatabaseRecoveryModel'} elseif ($_.'Text' -match 'RESTORING' ) {'DatabaseRestoring'} elseif ($_.'Text' -match 'Fatal' ) {'Error'} else {'Other'}} }, ` @{ n = "eventText"; e = { $_.Text.Replace("'", "''") } }

# filter out whatever is needed

$obj = $obj | Where-Object { $_.eventType -notmatch "Other"} if ($obj.Count -ge 1 ) { Write-Host "$sqlInstanace - total records $($obj.count) and exporting log to SQL Server $server" -ForegroundColor Yellow Write-Host "" $obj | ForEach-Object { Invoke-Sqlcmd ` -Database $database -ServerInstance $server ` -Query "insert into $table VALUES ( '$($_.eventCreated)', '$($_.eventServer)',` '$($_.eventSource)','$($_.eventType)','$($_.eventText)' ) " } # $result += $obj } } # $result | ft

ProcessErrorlogImport.ps1: Manually parsing and Importing of Errorlog data

This script can be used to manually import data from servers which are not listed in the “servers.txt” file. Suppose “SRV1010” is not in the “server.txt” file and we want to include this server in our scan of the last 15 days. In the script, we can assign one or more SQL Server Instances to the variable $sqlInstances, as follows:

$sqlInstances = “SRV1010”

$sqlInstances = “SRV1010”,”QRD0450\SQL2019”,”DEV4568”

Clear-Host Import-Module sqlserver ######################################################################### # Additional log import ######################################################################### # SQL Server instance to collect errorlog event [String[]]$sqlInstanaces = "SHBMAIN\SQL2012","SHBMAIN\SQL2014" # Database server to export results $database = 'TestDB' $server = 'SHBMAIN\SQL2019' $table = 'dbo.EventTbl' $nInitialDays = 15 [DateTime]$InitialStart = $(Get-Date).AddDays(-$nInitialDays) # Load the checkpoint file $PSScriptRoot = Split-Path -Parent -Path $MyInvocation.MyCommand.Definition $path = "$PSScriptRoot" $CheckPointFile = $path + "\Checkpoint.txt" # Retrieve last scan date/time from Checkpoint file if (! (Test-Path $CheckPointFile)) # Checkpoint file not found { Write-Host 'Checkpoint file does NOT exist' # first and last log scan date and time [DateTime]$LastCheckpoint = $InitialStart [DateTime]$NextCheckpointTime = Get-Date $NextCheckpointTime | Set-Content -Force $CheckPointFile } else # the Checkpoint file does exist { Write-Host 'Checkpoint file does exist' [DateTime]$LastCheckpoint = $InitialStart [DateTime]$NextCheckpointTime = Get-Content -Force $CheckPointFile } # list previously added servers $Servers = Get-Content ($path + "\Servers.txt") -Force Write-Host "Staring from : $LastCheckpoint" Write-Host "Next Start: $NextCheckpointTime" Write-Host " " # $result = @() foreach ($sqlInstanace in $sqlInstanaces) { $Process = $false if ($Servers -match $sqlInstanace.Replace("\", "\\")) { $Process = $false Write-Host "$sqlInstanace - does exist in server.txt file" -ForegroundColor Cyan } else { $Process = $true Write-Host "$sqlInstanace - is new and including in server.txt file" -ForegroundColor Yellow $sqlInstanace | Add-Content ($path + "\Servers.txt") } if ($Process) { Write-Host "$sqlInstanace - processing errorlog" -ForegroundColor Yellow $obj = "" | select eventCreated, eventServer, eventSource, eventType, eventText $obj = Get-SqlErrorLog -ServerInstance $sqlInstanace -After $LastCheckpoint -Before $NextCheckpointTime -ErrorAction SilentlyContinue | ` select @{ n = "eventCreated"; e = { $_.Date } }, ` @{ n = "eventYear"; expression = { $_.Date.ToString("yyyy") } }, ` @{ n = "eventMonth"; expression = { $_.Date.ToString("MMMM") } }, ` @{ n = "eventDate"; expression = { $_.Date.ToString('MM/dd/yyyy') } }, ` @{ n = "eventTime"; expression = { $_.Date.ToString('hh:mm:ss tt') } }, ` @{ n = "eventServer"; e = { $_.ServerInstance } }, ` @{ n = "eventSource"; e = { $_.Source } }, ` @{ n = "eventType"; expression = { if ($_.'Text' -match 'DBCC CHECKDB' ) {'CHECKDB'} elseif ($_.'Text' -match 'cachestore' ) {'CacheFlush'} elseif ($_.'Text' -match 'Job Failed' ) {'JobFailed'} elseif ($_.'Text' -match '(specified in network packet|packet used to open)' ) {'Connection'} elseif ($_.'Text' -match 'Configuration' ) {'Configuration'} elseif ($_.'Text' -match 'memory' ) {'Memory'} elseif ($_.'Text' -match 'Killed' ) {'Killed'} elseif ($_.'Text' -match 'requests taking longer' ) {'I/O'} elseif ($_.'Text' -match 'FlushCache' ) {'I/O'} elseif ($_.'Text' -match 'Login failed' ) {'LoginFailed'} elseif ($_.'Text' -match 'Login succeeded' ) {'LoginSucceeded'} elseif ($_.'Text' -match 'deadlocked' ) {'Deadlock'} elseif ($_.'Text' -match 'SQL Trace ID' ) {'TraceStart'} elseif ($_.'Text' -match 'SQL Trace stopped' ) {'TraceStop'} elseif ($_.'Text' -match 'Database backed up' ) {'BackupFull'} elseif ($_.'Text' -match 'Database differential changes were backed up' ) {'BackupDifferential'} elseif ($_.'Text' -match 'Log was backed' ) {'BackupLog'} elseif ($_.'Text' -match 'system shutdown' ) {'Shutdown'} elseif ($_.'Text' -match 'RESOLVING_NORMAL' ) {'AlwaysOn'} elseif ($_.'Text' -match 'RECOVERY' ) {'DatabaseRecoveryModel'} elseif ($_.'Text' -match 'RESTORING' ) {'DatabaseRestoring'} elseif ($_.'Text' -match 'Fatal' ) {'Error'} else {'Other'}} }, ` @{ n = "eventText"; e = { $_.Text.Replace("'", "''") } }

# filter out whatever is needed # $obj = $obj | Where-Object { $_.eventType -notmatch "Other|LogBackup" } # $obj = $obj | Where-Object { $_.eventSource -match "Logon" -and $_.eventType -notmatch "Other"}

$obj = $obj | Where-Object { $_.eventType -notmatch "Other" } if ($obj.Count -ge 1) { Write-Host "$sqlInstanace - total records $($obj.count) and exporting to SQL Server $server" Write-Host " " $obj | ForEach-Object { Invoke-Sqlcmd ` -Database $database -ServerInstance $server ` -Query "insert into $table VALUES ( '$($_.eventCreated)','$($_.eventServer)',` '$($_.eventSource)','$($_.eventType)','$($_.eventText)' ) " } # $result += $obj } } } Write-Host " " # $result | ft

Conclusion:

This script can be fine-tuned to fit your specific requirements. You can add other filters to categorize SQL events, such as Service Broker, data file full, and not enough disk space and so on. I hope this post helped you get a good start to processing SQL Server events from Errorlog.